The Artist and the Algorithm

A cartography of Artificial Intelligence

This text is a collection of suggestions that have contributed to delineating the critical and problematic framework of Re:Humanism, an exhibition born and developed as a separate organ. Before framing the reasons for this reflection, which, at a distance from the current trend, focuses on delineating potential paths, visions and purposes, a short digression on the history and development of AI technologies is necessary.

What some of us wrongly define as the future actually originated about seventy years ago, alongside developments in the biological study of human brain function and, in particular, of learning structures, which are regulated by processes in which the connections between neurons are strengthened by the frequency of their communication. As early as 1943, the first papers published by Warren McCulloch and Walter Pitts showed how this system could be artificially replicated, shifting the computational paradigm from processing capacity to a generative capacity that learns from the recurrence of data paths. This system was named evolutionist.

But the creation of a machine capable of simulating the behavior of neurons is attributed to Frank Rosenblatt, a psychologist at Cornell University, in the late 1950s. The Mark I Perceptron was a gigantic computer that occupied a whole room and was able to perform only minimal tasks. Rosenblatt then found himself forced to juggle the search for funding (which would have allowed him to carry out more complex research) and the obstructionism of the opposing camp: the supporters of a symbolic artificial system, based on a creationist logic according to which processing potential depends on rules provided top-down by man. This second theory, much more widespread for many years afterwards, was overturned only in the last decade thanks to the work of figures such as Yann LeCun, who later became director of Facebook AI Research, and Geoffrey Hinton, who went on to work at Google. Both resumed Rosenblatt’s work and rehabilitated the theories of the neural network that underlie the most recent AI developments.

Re: Humanism Art Prize # 1 Edition, installation overview, 2019, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

From this brief historical survey, it is clear that the current configuration of artificial intelligence algorithms brings into play a whole series of issues linked to the relationship between man and progress: while on the one hand there are those who still believe that any form of elaboration by an artificial intelligence cannot be separated from human regulation, on the other hand the bold attempt to interpret the future is driven by the curiosity and concern that the era of the technological singularity inevitably brings.

Within investigations of artificial intelligence, we can highlight two macro-areas. On one side is what I call “Human.” For me, this area covers all those scenarios involving robots and humanoids that, starting from the cinematic imagination from Fritz Lang onwards, see artificial intelligence as the possibility of a new form of existence, increasingly capable of emulating human behaviors and, in the most extreme scenarios, even of replacing humans.

Then there is another macro-area of artificial intelligence that I define in this discussion as “Algorithmic,” not because the former is not algorithmic, but in an attempt to group all those forms of artificial intelligence which, far from assuming an anthropomorphic dimension, are now rooted in our everyday experience as modes of use (think of devices like Alexa, the various voice assistants, facial recognition and the predictive systems that interact with our choices in various ways). Both areas are significant knots and lead back to different themes, which contribute on different levels to fueling the debate on the impact of artificial intelligence in the present, even before the future. We will try to bring the discussion back to some research areas to demonstrate the diversity of these reflections.

When we think about artificial intelligence, our memory inevitably goes to all those forms of cinematographic and literary representation that, for my generation (the one preceding the Millennials, which witnessed the great revolution of computers and smartphones entering the home), marked a before and an after. Represented in cult images such as the great red eye of HAL in Stanley Kubrick’s 2001: A Space Odyssey, the replicants of Ridley Scott’s Blade Runner, or the artificial child of Steven Spielberg’s A.I. Artificial Intelligence, the narrative of a superhuman, anthropologically connoted intelligence capable of feeling, making decisions and fighting for its independence helped construct an image of artificial intelligence that has led us to problematize the ever more opaque boundary between human and artificial, in a vision at times dystopian, at times emphatic (see the recent developments in transhumanist theories), in which the ability to redefine and reterritorialize what is properly human is fundamental.

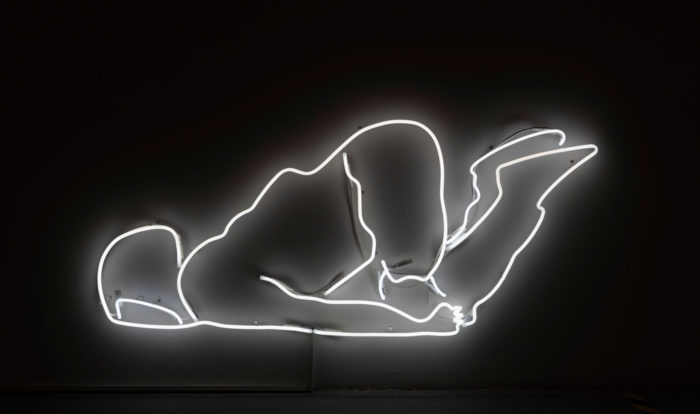

Giang Hoang Nguyen, The Fall, performance and multimedia installation (mattress, neon, headphones), audio 12’, 2018, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

According to Max Tegmark, the Swedish physicist and author of Life 3.0: Being Human in the Age of Artificial Intelligence, the human being has radically changed his life experience, moving from a 1.0 phase, the biological one, to a 2.0 cultural phase, consisting in the possibility of designing his own “software,” and then to a 3.0 phase in which the hardware, that is the body, can also be modified. The complexity that shapes identity, together with technological progress, makes the spectrum of this investigation even wider. Since the seventies, several artistic experiments have explored the body and its practices through technological grafting as well: this is the case of the famous interventions by Stelarc, Jana Sterbak or Marcel·lí Antúnez Roca.

As Teresa Macrì wrote in her 1996 essay The postorganic body: “The body under construction is a fantastic hybridization between organic and inorganic, between particulate matter and silicon chips. What the present suggests to us is a body with multiple contaminations and unpredictable functions. These alterations, that the body meets, oust its identity and redefine a mutant subjectivity.”

Lorem, Adversarial Feelings: 1-5: Latent Selves, looped audio-visual installation, 22’21”, 2019, AlbumArte, Rome. Courtesy of the artist

Therefore, we have moved from the dimension of “everything is possible” to a sophisticated reality, where man is called to reinvent himself in the light of scientific developments and advancing technologies. It is not surprising, at this point, that some exponents of the Transhumanist current see in the overcoming of the human body, and in the possibility of implanting memory in complex technological devices, not only a form of progress but even the possible overcoming of the ultimate limit: death.

It is precisely in the light of this dialectical relationship with the end that we can read a phenomenon like the Uncanny Valley, the outcome of research by the roboticist Masahiro Mori, who in 1970 published (in the journal Energy) an essay warning against the risks involved in the excessive humanization of robots. During his research on the interaction between man and automaton, Mori observed that, after an initial phase of recognition and appreciation, as the human likeness of the machine increases, its user experiences unpleasant feelings of repulsion and disturbance: we are facing the uncanny. Apparently this feeling derives from recognizing human limitations in the other, and from measuring oneself against one’s own mortality with each change of perception.

Enrico Boccioletti, devenir-fantôme, sound installation and choreographed space, 2019, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

Reversing the perspective, there are those who have seen in the hybridization between man and machine a claim for a new and strengthened identity, capable of incorporating the various issues of gender. In 1991, in Simians, Cyborgs, and Women: The Reinvention of Nature, the philosopher and feminist Donna Haraway delves into the political and narrative dimension of the cyborg and writes: “From a child of a capitalist and military culture to a new human chimera, a hybrid of organism and machine that is nothing more than ‘our ontology.’” In this way Haraway overturns the point of view and opens up a whole series of reflections on the coexistence of human and artificial, provided that we can look at identity as something fluid and sensitive to the changes that come with technological progress.

Various authors have worked on the coexistence of man and artificial intelligence, as evidenced by multiple narrative and cinematographic universes, today fed by the production of numerous TV series. In Humans (2015) the humanoids are devices marketed to carry out the most humble and repetitive work; in Westworld (2016) they animate a theme park; and in Altered Carbon (2018) human memory becomes transportable, through a cortical implant, from one body to another, making it possible to overcome the limit of physical death.

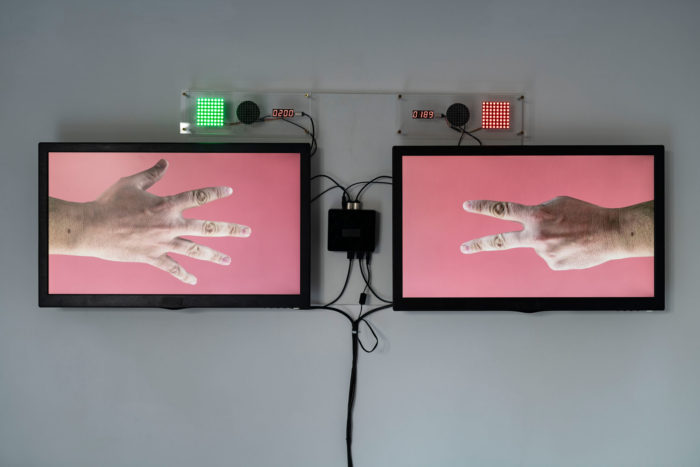

Daniele Spanò, Urge Oggi, multimedia installation, 2019, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

Wanting to push the reflection a little further, we need to ask ourselves how the human and the artificial will be able to relate to each other in the future. If, in a not-so-distant future, artificial intelligence knows not just how to emulate human behavior and feelings but also how to develop an autonomous language, how will we define these new forms of existence? What rights and values will be attributed to them? Always assuming that we can still talk about shared values.

In The Technological Singularity (2015), cognitive robotics professor Murray Shanahan imagines the possible developments of a world in which work, in its least gratifying aspects, is entrusted to machines, and states: “The AI have no life beyond work and are constantly threatened with death if they operate inadequately.” Although this appears brutal when related to human experience, it could represent our relational model with an automaton, meaning a device devoid of consciousness and therefore wholly given over to work. However, if the degree of sophistication were to allow for the development of an artificial consciousness, this would also entail the machine’s ability to experience pain and suffering, with the consequent development in man of a level of empathy that is difficult to define.

If so far we have explored some of the issues concerning the man-machine relationship, we now need to flip the coin and explore all those intelligent forms that mark our present rather than belonging to a projection of the future. I refer to all the algorithms that populate our daily lives at various stages of progress, accompanying us, in the case of smartphones, in our movements, in our working environments and inevitably in our homes.

Antonio “Creo” Daniele, Grammar # 1, interactive installation: tablet, dataset, 300 drawings, 12.5 x 12.5 cm each, 2019, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

Terms like machine learning, deep learning, image recognition, chatbots and voice assistants are no longer a mystery to us, and even less so are Facebook, Google, Amazon, Netflix and similar platforms; the former are some of the best-known AI applications, the latter are among the largest service providers, to which we turn every day when orienting our tastes, choices and purchases. Seen from this perspective, artificial intelligence appears much closer to us, and perhaps less attractive to some, but it is precisely from here that we should start to investigate the developments of a progress that will radically change the way in which we relate to things. When we limit the discussion to this dimension made up of mathematical formulas and progress bars, smartphone and smartwatch applications and facial recognition, other significant issues emerge. First of all, there is the need to understand our relationship with sensitive data. From geolocation to conversations, from user preferences to purchases, up to health, every day our digital identity is voluntarily (and involuntarily) written into the ether and analyzed, partly to offer us increasingly profiled experiences but also to influence our choices in political information and cultural taste. This splitting of identity is inherent to our perception, and understanding its nature helps us to react better to its future developments.

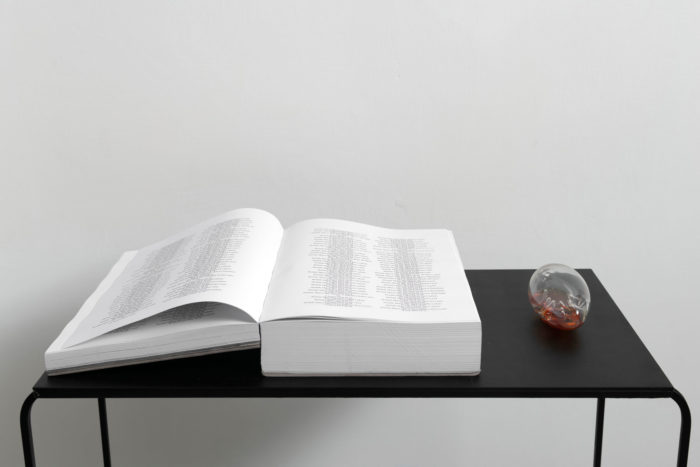

Michele Tiberio in collaboration with Diletta Tonatto, Me, My scent, publication 1619 pages, perfume, 2019, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

Another major reflection concerning the era of artificial intelligence is linked to its ability to automate processes and thus replace man in many working environments. Automation is today perhaps one of the major concerns related to the production system: while on the one hand it can increase efficiency and free us from mechanical and repetitive tasks, on the other hand it will pose, at least in its early stages, a problem of reallocation of resources and a consequent loss of jobs. Many have pointed out that such technological revolutions are not new to the history of progress; the most significant was the industrial one. Even then, industrialization brought a radical (and not painless) change in the way work and the economy were conceived, but today’s society shows that the productive classes were able to reorganize and compensate for the loss of tasks and skills.

As early as 1956, the German sociologist and philosopher Friedrich Pollock published an essay unequivocally entitled Automation. In his text Pollock (one of the main exponents of the Frankfurt School, with Adorno and Horkheimer) hopes for a “long-term planned program with the help of new methods” in order to rethink the dimension of work in the age of automation. Among the most interesting theories that accompany the automation era are those that see in it the possibility of totally freeing man from the dimension of work, thus trying to redefine the space of experience and identity on the basis of a system founded on the re-appropriation of free time.

Guido Segni, Demand full laziness today, looping video installation, 7’41”, flags, 2019 (2018-2023), AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

Nick Srnicek and Alex Williams go further and, in Inventing the Future (2015), imagine a universe in which full automation is able to meet the need for well-being, offering man the opportunity to redefine his own meaningful spaces in the light of concepts such as idleness, creativity and rest. To the sound of slogans such as “Demand full automation, claim the future, demand universal income,” the economist and the political theorist lay the foundations for a new accelerationist theory whose main objective is “a utopia without ifs and buts.”

While waiting for such a scenario to develop, we cannot fail to note that a real cultural movement linked to artificial intelligence is taking increasingly clear shape. One of the possible dangers is a cultural flattening in which algorithms replace free exploration, generating (as we can already ascertain) assisted forms of navigation capable of creating artificial links between our cultural choices (is this not the case with Netflix or Spotify?). On the other hand, Lev Manovich, director of the Cultural Analytics Lab in New York, points out: “While today AI is already automating aesthetic choices, suggesting what to look at, listen to or wear, even playing an important role in some areas of aesthetic production such as in mainstream photography (many features of modern mobile cameras use artificial intelligence), in the future it will play an increasingly important role in cultural production.”

Albert Barqué-Duran, Mario Klingemann, Marc Marzenit, My Artificial Muse, acrylic on canvas, 150 x 200 cm, looped video 8’, 2018, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

Although artificial intelligence systems are able to produce their own cultural products, even in the most successful cases human supervision is still strongly necessary; we are in fact speaking of “supervised machine learning” systems. This aspect will remain relevant as long as these products are subservient to the human cultural industry and unable to generate their own results. Indeed, in many different cultural fields (from music and literature to dance and the visual arts) authors have felt the need to explore the potential of this artificial language, either in dialogue with or in autonomy from human choices.

Once again, as Manovich points out, AI offers us a powerful tool for the analysis of cultural systems. From 2000 onwards, the field of computer science has made great strides, allowing us to analyze quantitative models of contemporary culture using progressively larger samples. Social networks, for example, represent a very important pool for analysis, helping to delimit areas and behaviors within the space of the dominant culture. In this sense, the external eye of the algorithm is able to reveal apparently imperceptible phenomena and to find new reference models within cultural complexity.

Adam Basanta, A Brief History of Western Cultural Production – International Gold Standard 001, giclée print, database of paintings, 60 x 60 cm, 2019, AlbumArte, Rome. Photo by Giorgio Benni. Courtesy Alan Advantage

A final scenario that deserves to be explored is the one that considers artificial intelligence as a tool for a collective intelligence, an aggregator and enhancer of experiences that only through the dialogue and interpenetration of their parts are able to respond to new demands in the fight against the bogeyman of technocracy. Such approaches create collaborative platforms that bring together entire communities of creatives and experts of all kinds, in search of a territory of hybridization in which man and machine collaborate in the development of sustainable ecosystems.

Born from a conversation between the contemporary art world and a company that makes consulting in the field of innovation its creed, the first edition of the Re:Humanism Art Prize pictures, through the ten winning projects, how to hold together the different forms of reflection and exchange that the advent of artificial intelligence implies. By different means, not necessarily governed by algorithms, Giang Hoang Nguyen, Albert Barqué-Duran, Mario Klingemann and Marc Marzenit, Enrico Boccioletti, Lorem, Michele Tiberio in collaboration with Diletta Tonatto, Enrica Beccalli in collaboration with Roula Gholmieh, Guido Segni, Antonio “Creo” Daniele, Adam Basanta and Daniele Spanò give us these multiple views.